At this year’s Japanese Association for Digital Humanities conference, in addition to giving a keynote on digital infrastructure, I presented this poster on a specific example: full-text digital library APIs as used in ctext.org and in teaching at Harvard EALC.

Abstract

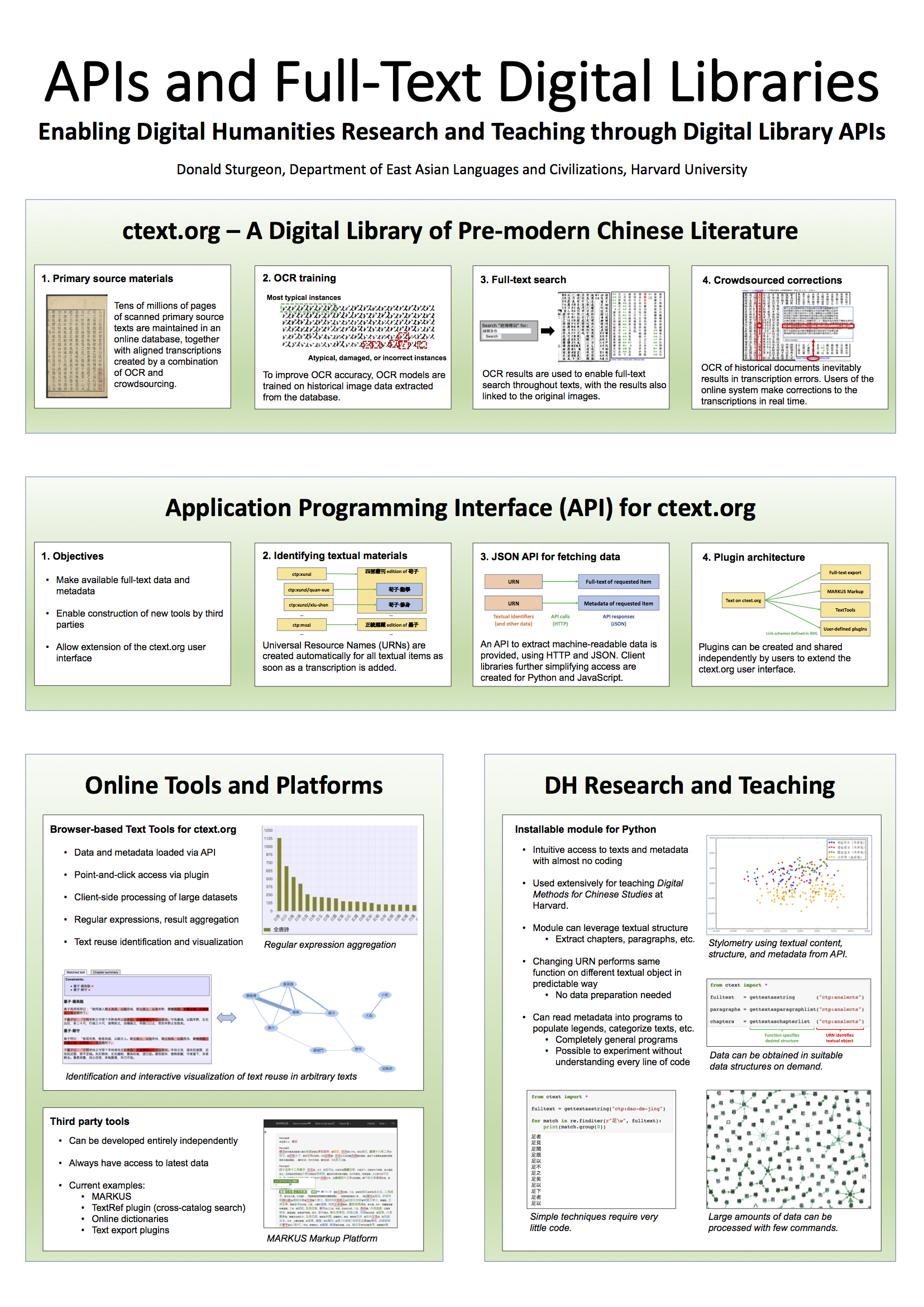

As digital libraries continue to grow in size and scope, their contents present ever increasing opportunities for use in data mining as well as digital humanities research and teaching. At the same time, the contents of the largest such libraries tend towards being dynamic rather than static collections of information, changing over time as new materials are added and existing materials augmented in various ways. Application Programming Interfaces (APIs) provide efficient mechanisms by which to access materials from digital libraries for data mining and digital humanities use, as well as by which to enable the distributed development of related tools. Here I present a working example of an API developed for the Chinese Text Project digital library being used to facilitate digital humanities research and teaching, while also enabling distributed development of related tools without requiring centralized administration or coordination.

Firstly, for data mining and for digital humanities teaching and research, the API facilitates direct access to textual data and metadata in machine-readable format. In the implementation described, the API itself consists of a set of documented HTTP endpoints returning structured data in JSON format. Textual objects are identified and requested by means of stable identifiers, which can be obtained programmatically through the API itself, as well as manually through the digital library’s existing public user interface. To further facilitate use of the API by end users, native modules for several programming environments (currently including Python and JavaScript) are also provided, wrapping API calls in methods adapted to the specific environment. Though not required in order to make use of the API, these native modules greatly simplify the most common use cases, further abstract details of implementation, and make it possible to write programs that perform sophisticated operations on arbitrary textual objects in a few lines of easily understandable code. This has obvious applications in digital humanities teaching, where simple and efficient access to data in consistent formats is of considerable importance when covering complex subjects within a limited amount of classroom or lab time. It also facilitates research use, where the ability to experiment rapidly with different materials and to prototype and reuse code with minimal effort is of practical utility.
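As a rough illustration of the access pattern described above, the following Python sketch requests a textual object by identifier and flattens it into a list of paragraphs. The base URL, the `gettext` endpoint name, the `urn` parameter, and the `fulltext`, `subsections`, and `error` response fields are assumptions made for this example rather than details given in the abstract. The helper names are hypothetical and are not the interface of the provided Python module.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

API_BASE = "https://api.ctext.org"  # assumed base URL for the HTTP endpoints


def build_url(endpoint, **params):
    """Return the request URL for an endpoint and its query parameters."""
    return f"{API_BASE}/{endpoint}?{urlencode(params)}"


def fetch_json(url):
    """Perform the HTTP GET and decode the JSON body (needs network access)."""
    with urlopen(url) as response:
        return json.load(response)


def get_paragraphs(urn, fetch=fetch_json):
    """Return the paragraphs of the textual object identified by `urn`.

    Assumed response shape: a leaf object carries a "fulltext" list of
    paragraphs, a container carries a "subsections" list of child
    identifiers, and a failed request carries an "error" field.
    """
    data = fetch(build_url("gettext", urn=urn))
    if "error" in data:
        raise RuntimeError(f"API error for {urn}: {data['error']}")
    if "fulltext" in data:
        return list(data["fulltext"])
    paragraphs = []
    for child in data.get("subsections", []):
        # Recurse into each child object and keep document order.
        paragraphs.extend(get_paragraphs(child, fetch))
    return paragraphs
```

Because the HTTP call is passed in as `fetch`, the same function can be run against cached or hand-written responses, which is convenient in a classroom setting where network access may be unreliable.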

Secondly, along with the API itself, a plugin mechanism allows the creation of user-definable extensions to the library’s online user interface. This makes it possible to augment core library functionality with external tools in ways that are transparent and intuitive to end users, while not requiring centralized coordination or approval to create or modify. Plugins consist of user-defined, sharable XML resource descriptions which can be installed into individual user accounts. The user interface uses information contained in these descriptions (such as link schemas) to send appropriate data, such as textual object references, to specified external resources, which can then request full-text data, metadata, and other relevant content via the API and perform task-specific processing on the requested data. Any user can create a new plugin, share it with others, and take responsibility for future updates to their plugin code.
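The link-schema idea can be sketched as follows. The XML layout here (a `plugin` element containing `link` elements with `scope` and `template` attributes, and a `{urn}` placeholder) is invented purely for illustration and is not the library's actual plugin format. The sketch only shows how a user interface might turn a plugin description plus a textual object reference into a link to an external tool.

```python
import xml.etree.ElementTree as ET
from urllib.parse import quote

# Hypothetical plugin description: element names, attributes, the {urn}
# placeholder, and the example.org URLs are all illustrative assumptions.
PLUGIN_XML = """
<plugin>
  <name>Example exporter</name>
  <link scope="chapter" template="https://tools.example.org/export?urn={urn}"/>
  <link scope="book" template="https://tools.example.org/export?urn={urn}&amp;all=1"/>
</plugin>
"""


def load_link_schemas(xml_text):
    """Map each link scope in the description to its URL template."""
    root = ET.fromstring(xml_text)
    return {link.get("scope"): link.get("template") for link in root.iter("link")}


def build_plugin_link(schemas, scope, urn):
    """Fill the template for `scope` with a URL-encoded object reference."""
    return schemas[scope].replace("{urn}", quote(urn, safe=""))


schemas = load_link_schemas(PLUGIN_XML)
print(build_plugin_link(schemas, "chapter", "ctp:analects/xue-er"))
```

The external tool that receives such a link would then use the reference it was given to request the corresponding text and metadata through the API.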

This technical framework enables a distributed web-based development model in which external projects can be loosely integrated with the digital library and its user interface: from an end user perspective they appear well integrated with the library, while from a technical standpoint they are developed and maintained entirely independently. Currently available applications using this approach include simple plugins for basic functionality such as full-text export, the “Text Tools” plugin for textual analysis, and the “MARKUS” named entity markup interface for historical Chinese texts developed by Brent Ho and Hilde De Weerdt, as well as a large number of external online dictionaries. The “Text Tools” plugin provides a range of common text processing services and visualization methods, such as n-gram statistics, similarity comparisons of textual materials based on n-gram shingling, and regular expression search and replace, along with network graph, word cloud, and chart visualizations; “MARKUS” uses external databases of Chinese named entities together with a custom interface to mark up texts for further analysis. Because of the standardization of format imposed by the API layer, such plugins have access not only to structured metadata about texts and editions, but also to structural information about the text itself, such as data on divisions of texts into individual chapters and paragraphs. For example, in the case of the “Text Tools” plugin, the user can draw on this information to aggregate regular expression results and perform similarity comparisons by text, by chapter, or by paragraph; in the latter two cases, results can also be visualized using the integrated network graphing tool.
As these tasks are facilitated by the API, tools such as these can be developed and maintained without requiring knowledge of or access to the digital library’s code base or internal data structures; from an end user perspective, these plugins do not require technical knowledge to use, and can be accessed as direct extensions to the primary user interface. This distributed model of development has the potential to greatly expand the range of available features and use cases of this and other digital libraries. It provides a practical separation of concerns between data and metadata creation and curation on the one hand, and text mining, markup, visualization, and other tasks on the other, while allowing this technical division to remain largely transparent to users of these separately developed and maintained tools and platforms.
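To make the kind of processing mentioned for “Text Tools” more concrete, here is a minimal Python sketch of n-gram shingling and of aggregating regular expression matches per chapter or paragraph. It is not the plugin's own code: the use of character n-grams, Jaccard similarity as the comparison measure, the threshold value, and all function names are assumptions chosen for illustration.

```python
import re
from itertools import combinations


def shingles(text, n=3):
    """Return the set of overlapping character n-grams in `text`."""
    return {text[i:i + n] for i in range(len(text) - n + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets (assumed measure; 0.0 if both are empty)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similarity_edges(units, n=3, threshold=0.2):
    """Compare every pair of named units (e.g. chapters or paragraphs).

    `units` maps a unit name to its text. Returns (name_a, name_b, score)
    tuples for pairs scoring at or above `threshold`, a form that could be
    handed to a network graph visualization as weighted edges.
    """
    sets = {name: shingles(text, n) for name, text in units.items()}
    edges = []
    for a, b in combinations(sorted(sets), 2):
        score = jaccard(sets[a], sets[b])
        if score >= threshold:
            edges.append((a, b, score))
    return edges


def count_matches_by_unit(units, pattern):
    """Count regular expression matches separately for each named unit."""
    regex = re.compile(pattern)
    return {name: sum(1 for _ in regex.finditer(text)) for name, text in units.items()}
```

Both functions take a mapping from unit name to text, so the same code can be applied at the level of whole texts, chapters, or paragraphs, depending on how the structural information from the API is used to split the material.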